Chapter 15 Memory Efficiency

As put by Kane et al. (2013), it was quite puzzling when very few of the competitors, for the Million dollars prize in the Netflix challenge, were statisticians. This is perhaps because the statistical community historically uses SAS, SPSS, and R. The first two tools are very well equipped to deal with big data, but are very unfriendly when trying to implement a new method. R, on the other hand, is very friendly for innovation, but was not equipped to deal with the large data sets of the Netflix challenge. A lot has changed in R since 2006. This is the topic of this chapter.

As we have seen in the Sparsity Chapter 14, an efficient representation of your data in RAM will reduce computing time, and will allow you to fit models that would otherwise require tremendous amounts of RAM. Not all problems are sparse however. It is also possible that your data does not fit in RAM, even if sparse. There are several scenarios to consider:

- Your data fits in RAM, but is too big to compute with.

- Your data does not fit in RAM, but fits in your local storage (HD, SSD, etc.)

- Your data does not fit in your local storage.

If your data fits in RAM, but is too large to compute with, a solution is to replace the algorithm you are using. Instead of computing with the whole data, your algorithm will compute with parts of the data, also called chunks, or batches. These algorithms are known as external memory algorithms (EMA), or batch processing.

If your data does not fit in RAM, but fits in your local storage, you have two options. The first is to save your data in a database management system (DBMS). This will allow you to use the algorithms provided by your DBMS, or let R use an EMA while “chunking” from your DBMS. Alternatively, and preferably, you may avoid using a DBMS, and work with the data directly form your local storage by saving your data in some efficient manner.

Finally, if your data does not fit on you local storage, you will need some external storage solution such as a distributed DBMS, or distributed file system.

15.1 Efficient Computing from RAM

If our data can fit in RAM, but is still too large to compute with it (recall that fitting a model requires roughly 5-10 times more memory than saving it), there are several facilities to be used. The first, is the sparse representation discussed in Chapter 14, which is relevant when you have factors, which will typically map to sparse model matrices. Another way is to use external memory algorithms (EMA).

The biglm::biglm function provides an EMA for linear regression.

The following if taken from the function’s example.

data(trees)

ff<-log(Volume)~log(Girth)+log(Height)

chunk1<-trees[1:10,]

chunk2<-trees[11:20,]

chunk3<-trees[21:31,]

library(biglm)

a <- biglm(ff,chunk1)

a <- update(a,chunk2)

a <- update(a,chunk3)

coef(a)## (Intercept) log(Girth) log(Height)

## -6.631617 1.982650 1.117123Things to note:

- The data has been chunked along rows.

- The initial fit is done with the

biglmfunction. - The model is updated with further chunks using the

updatefunction.

We now compare it to the in-memory version of lm to verify the results are the same.

b <- lm(ff, data=trees)

rbind(coef(a),coef(b))## (Intercept) log(Girth) log(Height)

## [1,] -6.631617 1.98265 1.117123

## [2,] -6.631617 1.98265 1.117123Other packages that follow these lines, particularly with classification using SVMs, are LiblineaR, and RSofia.

15.1.1 Summary Statistics from RAM

If you are not going to do any model fitting, and all you want is efficient filtering, selection and summary statistics, then a lot of my warnings above are irrelevant. For these purposes, the facilities provided by base, stats, and dplyr are probably enough. If the data is large, however, these facilities may be too slow. If your data fits into RAM, but speed bothers you, take a look at the data.table package. The syntax is less friendly than dplyr, but data.table is BLAZING FAST compared to competitors. Here is a little benchmark27.

First, we setup the data.

library(data.table)

n <- 1e6 # number of rows

k <- c(200,500) # number of distinct values for each 'group_by' variable

p <- 3 # number of variables to summarize

L1 <- sapply(k, function(x) as.character(sample(1:x, n, replace = TRUE) ))

L2 <- sapply(1:p, function(x) rnorm(n) )

tbl <- data.table(L1,L2) %>%

setnames(c(paste("v",1:length(k),sep=""), paste("x",1:p,sep="") ))

tbl_dt <- tbl

tbl_df <- tbl %>% as.data.frameWe compare the aggregation speeds. Here is the timing for dplyr.

library(dplyr)

system.time( tbl_df %>%

group_by(v1,v2) %>%

summarize(

x1 = sum(abs(x1)),

x2 = sum(abs(x2)),

x3 = sum(abs(x3))

)

)## user system elapsed

## 0.896 0.016 0.973And now the timing for data.table.

system.time(

tbl_dt[ , .( x1 = sum(abs(x1)), x2 = sum(abs(x2)), x3 = sum(abs(x3)) ), .(v1,v2)]

)## user system elapsed

## 0.850 0.144 0.866The winner is obvious. Let’s compare filtering (i.e. row subsets, i.e. SQL’s SELECT).

system.time(

tbl_df %>% filter(v1 == "1")

)## user system elapsed

## 0.321 0.111 0.432system.time(

tbl_dt[v1 == "1"]

)## user system elapsed

## 0.047 0.073 0.08915.2 Computing from a Database

The early solutions to oversized data relied on storing your data in some DBMS such as MySQL, PostgresSQL, SQLite, H2, Oracle, etc. Several R packages provide interfaces to these DBMSs, such as sqldf, RDBI, RSQite. Some will even include the DBMS as part of the package itself.

Storing your data in a DBMS has the advantage that you can typically rely on DBMS providers to include very efficient algorithms for the queries they support. On the downside, SQL queries may include a lot of summary statistics, but will rarely include model fitting28. This means that even for simple things like linear models, you will have to revert to R’s facilities– typically some sort of EMA with chunking from the DBMS. For this reason, and others, we prefer to compute from efficient file structures, as described in Section 15.3.

If, however, you have a powerful DBMS around, or you only need summary statistics, or you are an SQL master, keep reading.

The package RSQLite includes an SQLite server, which we now setup for demonstration. The package dplyr, discussed in the Hadleyverse Chapter 21, will take care of translating the dplyr syntax, to the SQL syntax of the DBMS. The following example is taken from the dplyr Databases vignette.

library(RSQLite)

library(dplyr)

file.remove('my_db.sqlite3')

my_db <- src_sqlite(path = "my_db.sqlite3", create = TRUE)

library(nycflights13)

flights_sqlite <- copy_to(

dest= my_db,

df= flights,

temporary = FALSE,

indexes = list(c("year", "month", "day"), "carrier", "tailnum"))Things to note:

src_sqliteto start an empty table, managed by SQLite, at the desired path.copy_tocopies data from R to the database.- Typically, setting up a DBMS like this makes no sense, since it requires loading the data into RAM, which is precisely what we want to avoid.

We can now start querying the DBMS.

select(flights_sqlite, year:day, dep_delay, arr_delay)filter(flights_sqlite, dep_delay > 240)15.3 Computing From Efficient File Structrures

It is possible to save your data on your storage device, without the DBMS layer to manage it. This has several advantages:

- You don’t need to manage a DBMS.

- You don’t have the computational overhead of the DBMS.

- You may optimize the file structure for statistical modelling, and not for join and summary operations, as in relational DBMSs.

There are several facilities that allow you to save and compute directly from your storage:

Memory Mapping: Where RAM addresses are mapped to a file on your storage. This extends the RAM to the capacity of your storage (HD, SSD,…). Performance slightly deteriorates, but the access is typically very fast. This approach is implemented in the bigmemory package.

Efficient Binaries: Where the data is stored as a file on the storage device. The file is binary, with a well designed structure, so that chunking is easy. This approach is implemented in the ff package, and the commercial RevoScaleR package.

Your algorithms need to be aware of the facility you are using. For this reason each facility ( bigmemory, ff, RevoScaleR,…) has an eco-system of packages that implement various statistical methods using that facility. As a general rule, you can see which package builds on a package using the Reverse Depends entry in the package description. For the bigmemory package, for instance, we can see that the packages bigalgebra, biganalytics, bigFastlm, biglasso, bigpca, bigtabulate, GHap, and oem, build upon it. We can expect this list to expand.

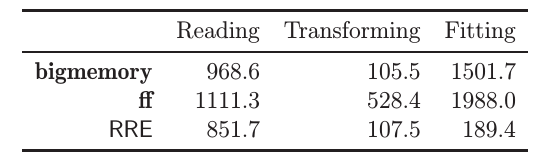

Here is a benchmark result, from Wang et al. (2015).

It can be seen that ff and bigmemory have similar performance, while RevoScaleR (RRE in the figure) outperforms them.

This has to do both with the efficiency of the binary representation, but also because RevoScaleR is inherently parallel.

More on this in the Parallelization Chapter 16.

15.3.1 bigmemory

We now demonstrate the workflow of the bigmemory package.

We will see that bigmemory, with it’s big.matrix object is a very powerful mechanism.

If you deal with big numeric matrices, you will find it very useful.

If you deal with big data frames, or any other non-numeric matrix, bigmemory may not be the appropriate tool, and you should try ff, or the commercial RevoScaleR.

# download.file("http://www.cms.gov/Research-Statistics-Data-and-Systems/Statistics-Trends-and-Reports/BSAPUFS/Downloads/2010_Carrier_PUF.zip", "2010_Carrier_PUF.zip")

# unzip(zipfile="2010_Carrier_PUF.zip")

library(bigmemory)

x <- read.big.matrix("data/2010_BSA_Carrier_PUF.csv", header = TRUE,

backingfile = "airline.bin",

descriptorfile = "airline.desc",

type = "integer")

dim(x)## [1] 2801660 11pryr::object_size(x)## 696 Bclass(x)## [1] "big.matrix"

## attr(,"package")

## [1] "bigmemory"Things to note:

- The basic building block of the bigmemory ecosystem, is the

big.matrixclass, we constructed withread.big.matrix. read.big.matrixhandles the import to R, and the saving to a memory mapped file. The implementation is such that at no point does R hold the data in RAM.- The memory mapped file will be there after the session is over. It can thus be called by other R sessions using

attach.big.matrix("airline.desc"). This will be useful when parallelizing. pryr::object_sizereturn the size of the object. Sincexholds only the memory mappings, it is much smaller than the 100MB of data that it holds.

We can now start computing with the data.

Many statistical procedures for the big.matrix object are provided by the biganalytics package.

In particular, the biglm.big.matrix and bigglm.big.matrix functions, provide an interface from big.matrix objects, to the EMA linear models in biglm::biglm and biglm::bigglm.

library(biganalytics)

biglm.2 <- bigglm.big.matrix(BENE_SEX_IDENT_CD~CAR_LINE_HCPCS_CD, data=x)

coef(biglm.2)## (Intercept) CAR_LINE_HCPCS_CD

## 1.537848e+00 1.210282e-07Other notable packages that operate with big.matrix objects include:

- bigtabulate: Extend the bigmemory package with ‘table’, ‘tapply’, and ‘split’ support for ‘big.matrix’ objects.

- bigalgebra: For matrix operation.

- bigpca: principle components analysis (PCA), or singular value decomposition (SVD).

- bigFastlm: for (fast) linear models.

- biglasso: extends lasso and elastic nets.

- GHap: Haplotype calling from phased SNP data.

15.3.2 bigstep

The bigstep package uses the bigmemory framework to perform stepwise model selction, when the data cannot fit into RAM.

TODO

15.4 ff

The ff packages replaces R’s in-RAM storage mechanism with on-disk (efficient) storage.

Unlike bigmemory, ff supports all of R vector types such as factors, and not only numeric.

Unlike big.matrix, which deals with (numeric) matrices, the ffdf class can deal with data frames.

Here is an example.

First open a connection to the file, without actually importing it using the LaF::laf_open_csv function.

.dat <- LaF::laf_open_csv(filename = "data/2010_BSA_Carrier_PUF.csv",

column_types = c("integer", "integer", "categorical", "categorical", "categorical", "integer", "integer", "categorical", "integer", "integer", "integer"),

column_names = c("sex", "age", "diagnose", "healthcare.procedure", "typeofservice", "service.count", "provider.type", "servicesprocessed", "place.served", "payment", "carrierline.count"),

skip = 1)Now write the data to local storage as an ff data frame, using laf_to_ffdf.

data.ffdf <- ffbase::laf_to_ffdf(laf = .dat)

head(data.ffdf)## ffdf (all open) dim=c(2801660,6), dimorder=c(1,2) row.names=NULL

## ffdf virtual mapping

## PhysicalName VirtualVmode PhysicalVmode AsIs

## sex sex integer integer FALSE

## age age integer integer FALSE

## diagnose diagnose integer integer FALSE

## healthcare.procedure healthcare.procedure integer integer FALSE

## typeofservice typeofservice integer integer FALSE

## service.count service.count integer integer FALSE

## VirtualIsMatrix PhysicalIsMatrix PhysicalElementNo

## sex FALSE FALSE 1

## age FALSE FALSE 2

## diagnose FALSE FALSE 3

## healthcare.procedure FALSE FALSE 4

## typeofservice FALSE FALSE 5

## service.count FALSE FALSE 6

## PhysicalFirstCol PhysicalLastCol PhysicalIsOpen

## sex 1 1 TRUE

## age 1 1 TRUE

## diagnose 1 1 TRUE

## healthcare.procedure 1 1 TRUE

## typeofservice 1 1 TRUE

## service.count 1 1 TRUE

## ffdf data

## sex age diagnose healthcare.procedure typeofservice

## 1 1 1 NA 99213 M1B

## 2 1 1 NA A0425 O1A

## 3 1 1 NA A0425 O1A

## 4 1 1 NA A0425 O1A

## 5 1 1 NA A0425 O1A

## 6 1 1 NA A0425 O1A

## 7 1 1 NA A0425 O1A

## 8 1 1 NA A0425 O1A

## : : : : : :

## 2801653 2 6 V82 85025 T1D

## 2801654 2 6 V82 87186 T1H

## 2801655 2 6 V82 99213 M1B

## 2801656 2 6 V82 99213 M1B

## 2801657 2 6 V82 A0429 O1A

## 2801658 2 6 V82 G0328 T1H

## 2801659 2 6 V86 80053 T1B

## 2801660 2 6 V88 76856 I3B

## service.count

## 1 1

## 2 1

## 3 1

## 4 2

## 5 2

## 6 3

## 7 3

## 8 4

## : :

## 2801653 1

## 2801654 1

## 2801655 1

## 2801656 1

## 2801657 1

## 2801658 1

## 2801659 1

## 2801660 1We can verify that the ffdf data frame has a small RAM footprint.

pryr::object_size(data.ffdf)## 392 kBThe ffbase package provides several statistical tools to compute with ff class objects.

Here is simple table.

ffbase::table.ff(data.ffdf$age) ##

## 1 2 3 4 5 6

## 517717 495315 492851 457643 419429 418705The EMA implementation of biglm::biglm and biglm::bigglm have their ff versions.

library(biglm)

mymodel.ffdf <- biglm(payment ~ factor(sex) + factor(age) + place.served,

data = data.ffdf)

summary(mymodel.ffdf)## Large data regression model: biglm(payment ~ factor(sex) + factor(age) + place.served, data = data.ffdf)

## Sample size = 2801660

## Coef (95% CI) SE p

## (Intercept) 97.3313 96.6412 98.0214 0.3450 0.0000

## factor(sex)2 -4.2272 -4.7169 -3.7375 0.2449 0.0000

## factor(age)2 3.8067 2.9966 4.6168 0.4050 0.0000

## factor(age)3 4.5958 3.7847 5.4070 0.4056 0.0000

## factor(age)4 3.8517 3.0248 4.6787 0.4135 0.0000

## factor(age)5 1.0498 0.2030 1.8965 0.4234 0.0132

## factor(age)6 -4.8313 -5.6788 -3.9837 0.4238 0.0000

## place.served -0.6132 -0.6253 -0.6012 0.0060 0.0000Things to note:

biglm::biglmnotices the input of of classffdfand calls the appropriate implementation.- The model formula,

payment ~ factor(sex) + factor(age) + place.served, includes factors which cause no difficulty. - You cannot inspect the factor coding (dummy? effect?) using

model.matrix. This is because EMAs never really construct the whole matrix, let alone, save it in memory.

15.5 disk.frame

15.6 matter

Memory-efficient reading, writing, and manipulation of structured binary data on disk as vectors, matrices, arrays, lists, and data frames.

TODO

15.7 iotools

A low level facility for connecting to on-disk binary storage.

Unlike ff, and bigmemory, it behaves like native R objects, with their copy-on-write policy.

Unlike reader, it allows chunking.

Unlike read.csv, it allows fast I/O.

iotools is thus a potentially very powerfull facility.

See Arnold, Kane, and Urbanek (2015) for details.

TODO

15.8 HDF5

Like ff, HDF5 is an on-disk efficient file format. The package h5 is interface to the “HDF5” library supporting fast storage and retrieval of R-objects like vectors, matrices and arrays.

TODO

15.9 DelayedArray

An abstraction layer for operations on array objects, which supports various backend storage of arrays such as:

- In RAM: base, Matrix, DelayedArray.

- In Disk: HDF5Array, matterArray.

Link Several application packages already build upon the DelayedArray pacakge:

- DelayedMatrixStats: Functions that Apply to Rows and Columns of DelayedArray Objects.

- beachmat C++ API for (most) DelayedMatrix backends.

15.10 Computing from a Distributed File System

If your data is SOOO big that it cannot fit on your local storage, you will need a distributed file system or DBMS. We do not cover this topic here, and refer the reader to the RHipe, RHadoop, and RSpark packages and references therein.

15.11 Bibliographic Notes

An absolute SUPERB review on computing with big data is Wang et al. (2015), and references therein (Kane et al. (2013) in particular). Michael Kane also reports his benchmarks of in-memory, vs. DBMS operations. Here is also an excellent talk by Charles DiMaggio. For an up-to-date list of the packages that deal with memory constraints, see the Large memory and out-of-memory data section in the High Performance Computing task view. For a list of resources to interface to DMBS, see the Databases with R task view. For more on data analysis from disk, and not from RAM, see Peter_Hickey’s JSM talk.

15.12 Practice Yourself

References

Arnold, Taylor, Michael Kane, and Simon Urbanek. 2015. “Iotools: High-Performance I/O Tools for R.” arXiv Preprint arXiv:1510.00041.

Kane, Michael J, John Emerson, Stephen Weston, and others. 2013. “Scalable Strategies for Computing with Massive Data.” Journal of Statistical Software 55 (14): 1–19.

Wang, Chun, Ming-Hui Chen, Elizabeth Schifano, Jing Wu, and Jun Yan. 2015. “Statistical Methods and Computing for Big Data.” arXiv Preprint arXiv:1502.07989.

The code was contributed by Liad Shekel.↩

This is slowly changing. Indeed, Microsoft’s SQL Server 2016 is already providing in-database-analytics, and other will surely follow.↩